Google AI Threatens Website with Demonetization. What's Next?

When did the corporate state ever want less control?

This has a combo of subjects, none of them good: AI, eager Big Tech censorship of political writing, and the heavy hammer of Google demonetization used against alternative news and discussion sites.

This comes on top of the recent TikTok bill passing the House, a bill that gives the government vast new power of control over … wait for it … websites, not just social media apps like TikTok. Bet you didn’t hear that on MSNBC.

If you’re one who believes that the modern American State is a public-private partnership, a tech-media-finance-NatSec-pol party mashup, your world view just got darker. And vindicated.

Google AI Threatens Site with Demonetization by Mis-Categorizing Posts

We can start here, with Yves Smith’s economics site Naked Capitalism. (Disclosure: Smith frequently picks up posts from yours truly for publication there.)

Here’s the original demand. It came on March 4 from the site’s ad service in response to a complaint from Google, and it is believed to pass along Google’s demonetization threat as is (emphasis mine):

Hope you are doing well!

We noticed that Google has flagged your site for Policy violation and ad serving is restricted on most of the pages with the below strikes:

VIOLENT_EXTREMISM,

HATEFUL_CONTENT,

HARMFUL_HEALTH_CLAIMS,

ANTI_VACCINATION

Here are few Google support articles that should be handy:

Policy issues and Ad serving statuses

I’ve listed the page URLs in a report and attached it to the email. I request you to review the page content and fix the existing policy issues flagged. If Google identifies the flags consistently and if the content is not fixed, then the ads will be disabled completely to serve on the site. Also, please ensure that the new content is in compliance with the Google policies.

Let me know if you have any questions.

Thanks & Regards,

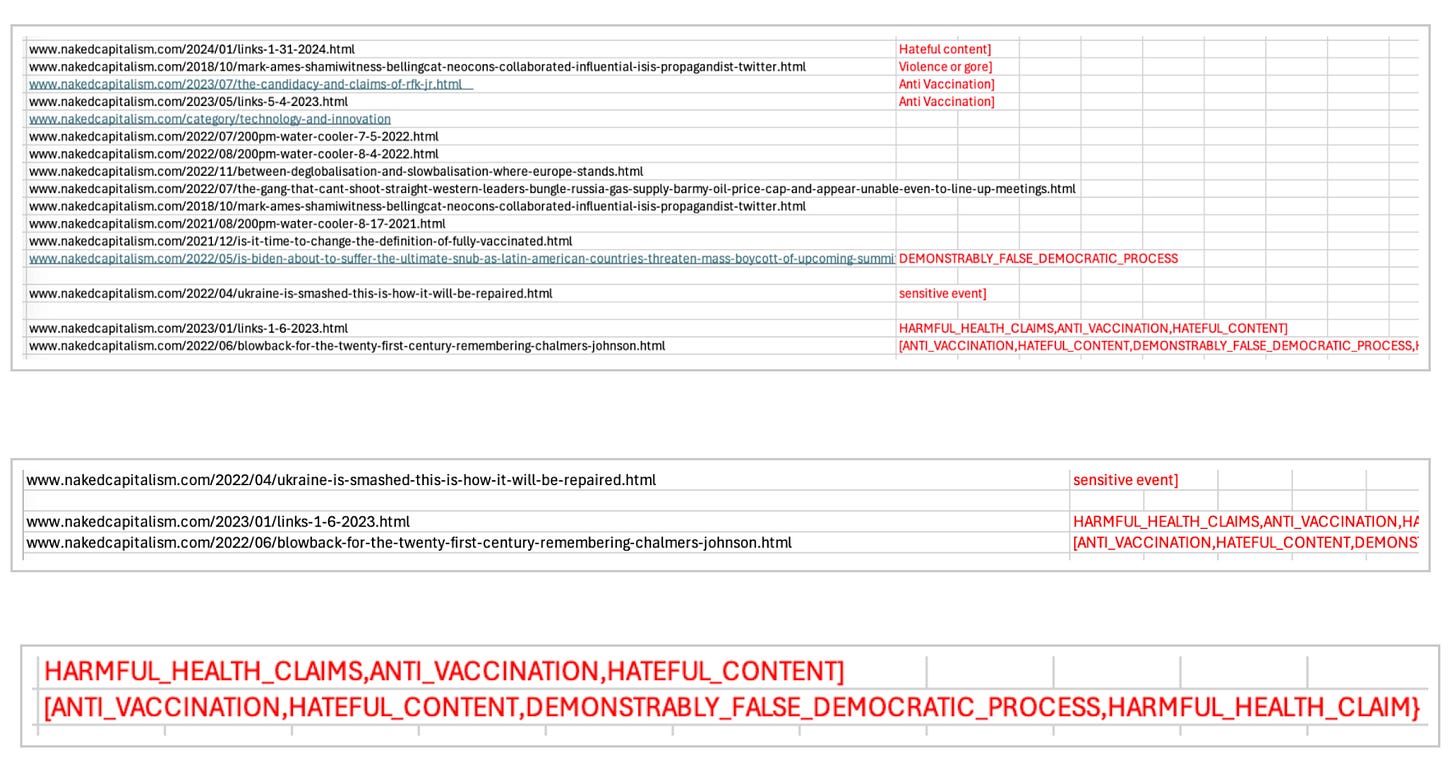

See below for the spreadsheet that shows which posts Google has questioned, and why.

About the final entry in the spreadsheet, which was tagged as having Harmful Health Claims, Anti-Vaccination information, and Demonstrably False Democratic Process information, Smith wrote this:

The URL is for a cross-post from Tom Engelhardt about Chalmers Johnson, Blowback for the Twenty-First Century, Remembering Chalmers Johnson. Johnson was a mild critic of US foreign policy, and has nothing whatsoever to do with health or health care policy. That creates the appearance that Google regards “anti-vaxx” as a showstopper, and is for some reason desperately applying it to this site, which is not vaccine hostile. Google has blatantly mislabeled unrelated content to try to make that bogus charge.

Anyone who knows Chalmers Johnson’s work knows that Blowback is not about vaccines. If you search the post for “vaccination,” you will find a reference, but it’s in the comments.

In a later post on this subject (March 21), Smith wrote:

We consulted several experts. All are confident that Google relied on algorithms to single out these posts. As we will explain, they also stressed that whatever Google is doing here, it is not for advertisers.

Given the gravity of Google’s threat, it is shocking that the AI results are plainly and systematically flawed. The algos did not even accurately identify unique posts that had advertising on them, which is presumably the first screen in this process. Google actually fingered only 14 posts in its spreadsheet, and not 16 as shown, for a false positive rate merely on identifying posts accurately, of 12.5%.

Those 14 posts are out of 33,000 over the history of the site and approximately 20,000 over the time frame Google apparently used, 2018 to now. So we are faced with an ad embargo over posts that at best are less than 0.1% of our total content.

And of those 14, Google stated objections for only 8. Of those 8, nearly all, as we will explain, look nonsensical on their face.
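Smith’s percentages are easy to check. Here is a quick sketch in Python using only the approximate counts quoted above; the variable names are mine, and the inputs are Naked Capitalism’s own rough figures, not Google’s data.

```python
# Approximate counts as reported by Naked Capitalism (March 21 post).
rows_in_spreadsheet = 16   # entries listed in Google's spreadsheet
unique_posts = 14          # distinct posts once duplicate rows are removed
posts_total = 33_000       # approximate posts over the site's history
posts_window = 20_000      # approximate posts from 2018 to now

# Share of spreadsheet rows that were not unique posts (Smith's "12.5%").
duplicate_rate = (rows_in_spreadsheet - unique_posts) / rows_in_spreadsheet

# Flagged posts as a share of the site's content.
share_of_window = unique_posts / posts_window
share_of_total = unique_posts / posts_total

print(f"duplicate rows: {duplicate_rate:.1%}")            # 12.5%
print(f"share of 2018-now posts: {share_of_window:.3%}")  # 0.070%
print(f"share of all posts: {share_of_total:.3%}")        # 0.042%
```

Even measured against the smaller 2018-to-now window, the flagged posts come to about 0.07% of the content, consistent with Smith’s “less than 0.1%.”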

She adds:

For those new to Naked Capitalism, the publication is regularly included in lists of best economics and finance websites. Our content, including comments, is archived every month by the Library of Congress. Our major original reporting has been picked up by major publications, including the Wall Street Journal, the Financial Times, Bloomberg, and the New York Times. We have articles included by academic databases such as the Proquest news database. Our comments section has been taught as a case study of reader engagement in the Sulzberger Program at the Columbia School of Journalism, a year-long course for mid-career media professionals.

So it seems peculiar with this site having a reputation for high-caliber analysis and original reporting, and a “best of the Web” level comments section, for Google to single us out for potentially its most extreme punishment after not voicing any objection since we started running ads, more than 16 years ago.

Smith’s March 21 response contains a full exegesis (in this case, a takedown) of the numerous, massive errors in this brainless AI sweep through early and recent posts. Please do read it. I’m shocked by what she discovered.

About Those Covid Complaints

Those of you worried about the spread of vaxx misinformation (more about that later perhaps) may wonder about the Harmful Health Claims and Anti-Vaccination flags. Here’s Matt Taibbi’s analysis of the posts flagged that way (emphasis mine):

A Barnard College professor, Rajiv Sethi, evaluated Robert F. Kennedy’s candidacy and wrote, “The claim… is not that the vaccine is ineffective in preventing death from COVID-19, but that these reduced risks are outweighed by an increased risk of death from other factors. I believe that the claim is false (for reasons discussed below), but it is not outrageous.” This earned the judgment, “[AntiVaccination].” Sethi wrote his own explanation here, but this is a common feature of moderation machines; they can’t distinguish between advocacy and criticism.

A link to “Evaluation of Waning of SARS-CoV-2 Vaccine–Induced Immunity” a peer-reviewed article by the Journal of the American Medical Association, was deemed “[AntiVaccination].”

An entry critical of vaccine mandates, which linked to the American Journal of Public Health article SARS-CoV-2 Infection, Hospitalization, and Death in Vaccinated and Infected Individuals by Age Groups in Indiana, 2021‒2022, earned [HARMFUL_HEALTH_CLAIMS, ANTI_VACCINATION, HATEFUL_CONTENT] tag.

So the AI bot tagged these pieces as anti-vaxx: a pro-vaxx post, a post linking to a peer-reviewed article in the Journal of the AMA, and a post linking to an article from the American Journal of Public Health. That’s a pretty dumb AI bot they’re growing in there.

What’s Going on Here?

Taibbi makes the point that most will make — that this is a well-meant effort gone badly wrong, thanks to clumsy AI:

Technologists are in love with new AI tools, but they don’t always know how they work. Machines may be given a review task and access to data, but how the task is achieved is sometimes mysterious. In the case of Naked Capitalism, a site where even comments are monitored in an effort to pre-empt exactly these sorts of accusations, it’s only occasionally clear how or why Google came to tie certain content to categories like “Violent Extremism.” Worse, the company may be tasking its review bots with politically charged instructions even in the absence of complaints from advertisers.

But why is Google doing this at all? Why is this sort of thing happening?

Taibbi’s partial answer: “Companies (and governments) have learned that the best way to control content is by attacking revenue sources, either through NewsGuard- or GDI-style ‘nutrition’ or ‘dynamic exclusion’ lists, or advertiser boycotts.”

The rest of that paragraph talks about AI’s dumbness and the lack of human involvement in the process. But what is the process for? Taibbi names it above without expanding on it:

“The best way to control content.”

You have to admit that, whether you’re on the strongly-for-free-speech side or the side that says “We can’t trust people not to fall for more Trump,” the crux of the issue is control.

So to answer our headline question, “What’s next?” — more control. These people will never want less.

That TikTok Law

Speaking of control, here’s a highlighted version of the House-passed TikTok bill, the Protecting Americans from Foreign Adversary Controlled Applications Act (H.R. 7521):

Note, at the top, that websites are subject to this law, not just one app. Lots of things are websites. You’re reading a website. This isn’t just about TikTok and China.

Now consider the language “controlled by a foreign adversary.” Taibbi’s comment: “A ‘foreign adversary controlled application’ … can be any company founded or run by someone living at the wrong foreign address, or containing a small minority ownership stake. Or it can be any company run by someone ‘subject to the direction’ of either of those entities. Or, it’s anything the president says it is. Vague enough?”

‘Collect it all, tag it, store it’

Is this a cynical view of the TikTok law? Of course it is. If that’s not your view, I refer you to the Bush II years, when the NatSec state used the latest, greatest fear of the day to disguise a similar power grab.

Here’s what they did in response to the Twin Towers attack:

About that NatSec response, the Washington Post wrote this, and meant it as praise, not blame (emphasis mine):

"Rather than look for a single needle in the haystack, [NSA director, Gen. Keith Alexander’s] approach was, 'Let's collect the whole haystack,' " said one former senior U.S. intelligence official who tracked the plan's implementation. "Collect it all, tag it, store it. . . . And whatever it is you want, you go searching for it."

The bones of that earlier response have grown meat and fur. So where are we today? More free? More secure? Or considerably less of both?

I believe in free speech. I belong to the American Civil Liberties Union (ACLU) and still to this day support the decision to grant Nazis the right to march in Skokie, Illinois. (Sadly, the current iteration of the ACLU probably would not.)

So, like Taibbi, I am a free speech absolutist.

To label Naked Capitalism (NC) a purveyor of misinformation, especially on the topic of COVID, is an outrage. Yves Smith and Lambert Strether articulate daily the dangers of this pandemic and make their readers fully aware that the risk of infection is not over. The site further validates their views with articles and comments from experts in epidemiology, immunology, and virology, and from practicing physicians.

Reading the Substack comments on Taibbi's article can help one understand the nuance of the views that follow.

Some commenters think that NC belongs on Substack. Well, Smith and Strether both say, "If your business depends upon a platform, you do not have a business."

A lot of commenters view NC's stance on COVID as unfounded hysteria. Sadly, the COVID "experts" whom Taibbi defends as victims of shadow banning share those views. Taibbi openly questions the efficacy of masks and of lockdowns in quelling this pandemic. (We had a pathetic attempt at a lockdown, which was implemented too late, with too little income support, too limited in scope, and too short in duration.)

What should prevail in all public matters is open discourse. The government has the ability to state views. It should not have the ability to quash speech, nor the inclination to order intermediaries to do the same. This inclination to steer discourse toward a prescribed viewpoint is, in my mind, the driving force behind forcing TikTok's current owners to divest it.

This exchange of views is the foundation of a society's immunity to bullshit. Deep-sixing views for not adhering to "the science" is a shameful tactic that will eventually boomerang. NC does publish science, not wishful thinking.

Just got suspended from Twitter for 12 hours, and made to delete the tweet, for humorously suggesting that it was time to kill the rich and take all our money back.

Huge improvement under Musk. Under the old regime I was banished for over a year for linking to The Saker.

At this rate Americans may be allowed to once again have opinions some day. Not right away, but eventually. For sure.